Artificial intelligence is no longer merely a technological tool designed to enhance efficiency or automate routine tasks. It is a revolutionary force reshaping how humanity produces wealth, exercises power, and organises personal and social life. What distinguishes AI from earlier technological revolutions is not its scope or speed, but its target: the imitation of advanced human cognition. Although the long-term consequences of this transition remain uncertain, one thing is already clear: no previous technological revolution has so directly challenged the centrality of the human brain itself.

Unlike machines that alleviated the need for physical labour or amplified human interactions, artificial intelligence encroaches upon human cognition, creativity, and judgment, domains that had never been fundamentally challenged by machines. As societies grapple with the possibilities and anxieties unleashed by this transformation, it becomes essential to understand how we arrived here. This timeline traces some of the key milestones in the development of artificial intelligence, illuminating the intellectual and technological pathways that have shaped the ongoing AI revolution.

Imitating Intelligence: Early Ideas and Foundations

1950: Alan Turing introduced the Imitation Game, framing machine intelligence as a question of indistinguishable behaviour rather than consciousness.

1956: The Dartmouth Conference, organised by John McCarthy, Marvin Minsky, and others, popularised the term “Artificial Intelligence” and formally launched AI as a research field.

1957: Frank Rosenblatt created the Perceptron, one of the first artificial neural networks, capable of learning from examples through trial and error.

1958: John McCarthy created Lisp, a programming language that became dominant in early AI research because of its symbolic processing capabilities.

1966: Joseph Weizenbaum built ELIZA, a natural language processing chatbot, showing how simple pattern-matching can mimic human conversation.

1969: SRI International developed the first “general-purpose” mobile robot, Shakey the Robot, capable of reasoning about its own actions to navigate a room.

1970s: Rule-based expert systems such as MYCIN demonstrate that computers can match or outperform human specialists in narrow, well-defined domains.

1971: Intel releases the 4004, the first commercial microprocessor, and hardware miniaturisation begins the long road toward scalable computation.

1974-1980: During the period known as the “First AI Winter”, funding and optimism declined as early promises failed to translate into real-world intelligence.

1980: AI became commercially viable through expert systems such as XCON, which used rule-based logic to solve specific industry problems.

1980s: AI regains momentum as businesses deploy rule-based decision systems in medicine, finance, and manufacturing.

From Rules to Learning Machines

1986: Geoffrey Hinton, David Rumelhart, and Ronald Williams popularised backpropagation, an algorithm that allows multi-layer neural networks to train effectively.

1987-1993: During the period known as the “Second AI Winter”, high costs, brittle systems, and limited computing power triggered another collapse in AI investment.

1997: Sepp Hochreiter and Jürgen Schmidhuber introduced Long Short-Term Memory (LSTM), enabling AI to “remember” long-term dependencies in data.

1997: IBM’s chess-playing computer, Deep Blue, defeats Russian chess grandmaster Garry Kasparov, marking a symbolic triumph for machine computation over human expertise.

1998: At the MIT Artificial Intelligence Laboratory, Dr Cynthia Breazeal developed a robot, Kismet, that could recognise and simulate human emotions, a milestone in social robotics.

2002: iRobot Corporation launched the Roomba, the first mass-market autonomous domestic robot, bringing AI navigation into millions of homes.

Compute as Power: Scaling Artificial Intelligence

2006: Geoffrey Hinton and collaborators showed how deep, multi-layer neural networks (deep belief networks) can be trained effectively by pre-training them one layer at a time.

2006: NVIDIA introduced Compute Unified Device Architecture (CUDA), allowing researchers to program Graphics Processing Units (GPUs) for general-purpose, non-graphics tasks.

2007-2010: NVIDIA’s GPUs dramatically accelerated neural network computation.

2012: AlexNet, a deep neural network, dramatically outperforms rivals in the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), signalling the deep learning era.

2014: Ian Goodfellow and colleagues propose Generative Adversarial Networks (GANs), enabling machines to generate realistic images, audio, and video.

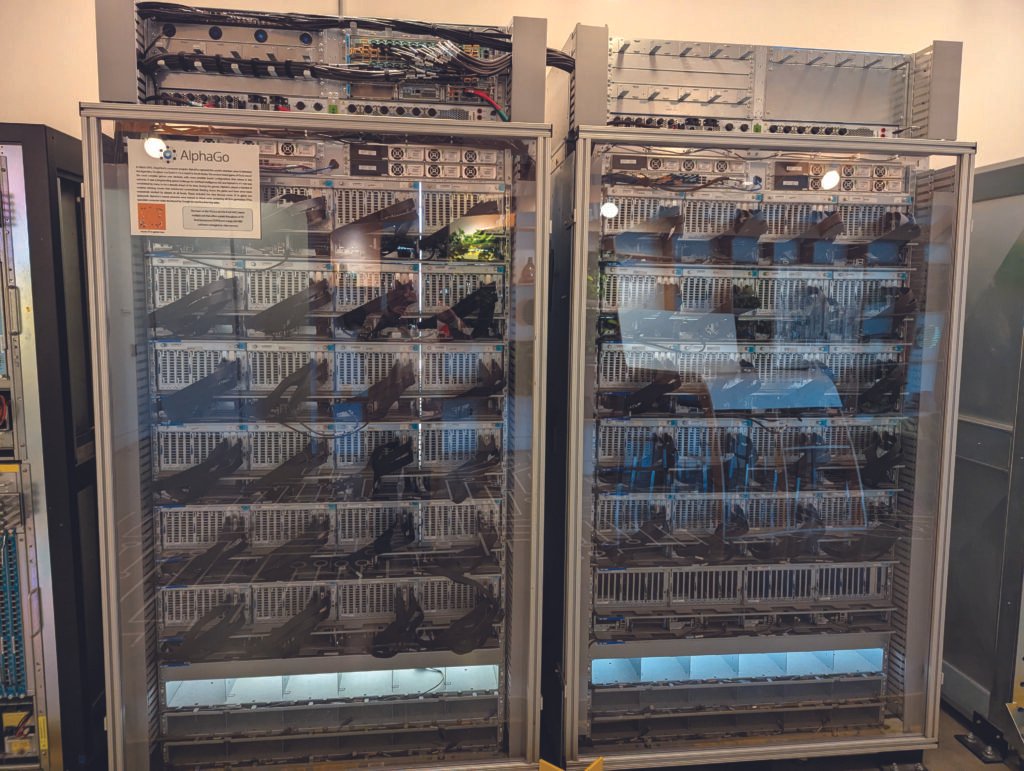

2016: DeepMind’s AlphaGo combines deep learning and reinforcement learning to master the game of Go and defeats South Korean professional Go player Lee Sedol.

2017: The landmark research paper “Attention Is All You Need”, authored by eight scientists working at Google, introduced the Transformer architecture, the foundation for modern large language models.

Governing Intelligence: Ethics, Regulation, and Geopolitics

2017: The International Telecommunication Union (ITU), a UN agency, organised the AI for Good Global Summit, marking the UN system’s first sustained global platform on artificial intelligence.

2018: Google’s BERT model significantly improves contextual language comprehension.

2018: The UN Secretary-General establishes the High-level Panel on Digital Cooperation, formally bringing AI, data, and digital technologies into the UN’s global governance agenda.

2020: OpenAI’s GPT-3 demonstrates how scaling up model size and training data can produce emergent linguistic and apparent reasoning abilities.

2020s: NVIDIA GPUs (A100, H100) emerge as geopolitically sensitive infrastructure, shaping export controls, supply chains, and national AI strategies.

2021: DeepMind’s AlphaFold predicts protein structures with high accuracy, addressing a long-standing challenge in biology and accelerating drug discovery and life sciences research.

2021: UNESCO adopts the “Recommendation on the Ethics of Artificial Intelligence”, the first global normative framework on AI ethics.

2022: Conversational AI reaches mass adoption through ChatGPT, reshaping education, work, and public debate.

2023: Generative models become embedded in search engines, office software, and creative platforms.

2024: The UN General Assembly adopts its first consensus resolution on artificial intelligence, affirming the need for “safe, secure, and trustworthy” AI for sustainable development.

2024: NVIDIA announced the Blackwell B200 GPU, a major leap in hardware designed to support trillion-parameter “frontier” models.

2024: The EU AI Act becomes law, establishing the world’s first comprehensive, legally binding framework for regulating AI based on risk levels and setting a global reference point for AI governance.

2025: With the introduction of specialised autonomous agents, such as OpenAI’s Operator and Google’s Project Mariner (previously reported as Project Jarvis), AI evolved from chatbots into agents capable of using a computer, planning multi-step workflows, and executing tasks autonomously.

2025: Companies like Figure, Tesla (Optimus), and Boston Dynamics began integrating LLM “brains” into humanoid robots, enabling them to follow natural language commands in real time.

2026: India is scheduled to host the India AI Impact Summit, the first global AI summit in the Global South, following earlier meetings at Bletchley Park, Seoul, and Paris.

Artificial intelligence is evolving rapidly through multiple breakthroughs and the layered accumulation of ideas, hardware capabilities, and institutional responses. Its recent growth trajectory suggests that AI-related developments are likely to have far-reaching consequences in the near future. Yet there remains no clear direction in policy thinking on the deeper questions of AI governance, accountability, and the inequalities it may generate. How societies choose to manage the evolution of AI—politically, ethically, and institutionally—will shape not only the future of this technology-led transformation but also the trajectory of our collective life.